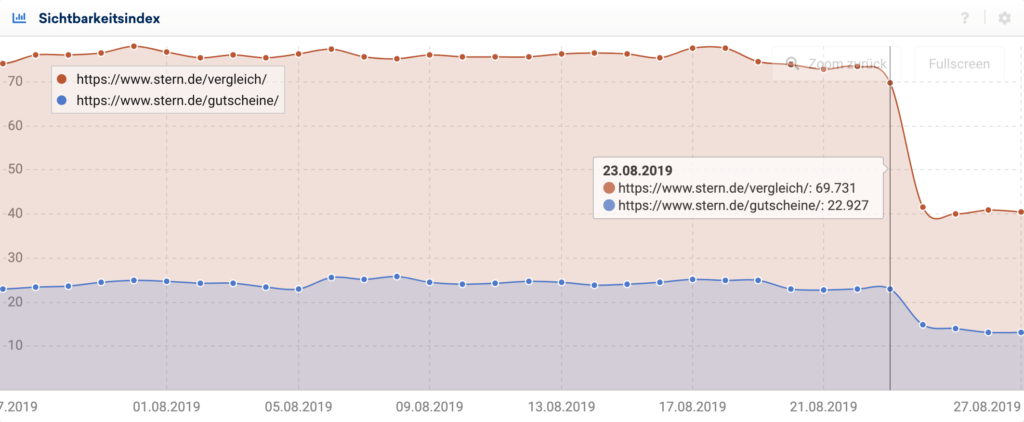

- On Friday the 24th of August, Google demoted commercial content – mostly coupon and review sections – across several publishing assets worldwide

- This drastic measure was preceded by a campaign from a single anonymous Twitter/Medium user (@LoisH), who repeatedly tried to draw Googlers’ attention to these sites

- After countless attempts by LoisH to raise awareness of this topic, including submitting a list of targets, Google gave in and said it was seeking to address the issue

- Google may have missed its objective of creating better results for the user, as search results are now in fact worse

- Google may also have deprived many publishing houses of revenues that were crucial to their survival, at a time when publishers are seeking to diversify their revenue streams

Google’s recent actions were a first in many respects. Not only did Google’s action follow a series of anonymous posts and outings, extensively researched by a single user who calls himself LoisH; it also exclusively targeted a specific business model (commercial content) in an isolated industry (publishing). Many of the “targets” denounced by LoisH have now lost a double-digit percentage of their search traffic.

So what happened?

Last Friday (August 24th 2019), Google demoted several instances of commercial content, more specifically so-called “white labels” that create coupon or review content under the umbrella of a publishing brand. These cooperations, usually based on a revenue-share model, have complemented publishers’ content offerings while at the same time generating significant revenues through affiliate partnerships with eCommerce players.

In a previously announced move (see tweet above), Google has now limited the ability of these offerings to rank well in its search results, without explaining why they would be unfavourable for the user or for the quality of search results. These sections most likely benefited from the authoritative status of publishing assets as well as the trustworthiness of their brands.

Now that these cooperations generate significantly less traffic than before, a source of revenue that publishing houses had built up to diversify their income has dried up, which is likely to hurt the commercial viability of publishing in the long run, once more.

Commercial Content is essential for digital revenues of publishers

According to my personal knowledge (and hearsay), commercial content has represented between 20 and 40% of digital revenues across several publishing houses and assets. Given the general challenges these enterprises face, it’s easy to predict the consequences of Google’s most recent swing at the industry. We’ll see more journalists losing their jobs, more editorial departments being closed and, ultimately, less high-quality journalism in the long run.

But let’s start at the beginning rather than the end…

An anonymous Denunciator calling out Google for action on a specific business model

Since late June of 2018, an anonymous Twitter and Medium user calling him- or herself “LoisH” has been producing “evidence” of an “Exploitation of Google’s Algorithms” that supposedly helps two German companies “Make Millions”. The targeted companies are the Munich/Berlin-based “Global Savings Group” and the Hamburg-based “Savings United”.

These companies operate a global network of their own coupon websites and white labels, employing hundreds of people and – yes – (likely) generating millions in revenue for retailers, publishers and themselves while providing consumers with the most recent coupon codes and savings content.

The coupon industry is defined by a general lack of differentiation, as almost every player has access to the same content and the user’s intent is rather shallow (“find me the best coupon for retailer X”). While industry players have repeatedly tried every strategic approach and technical trick to sustain and improve their rankings, to me, as an experienced SEO who has seen dozens of industries and hundreds of companies, the (search) results appeared almost random and unpoliced by Google.

Hence, seeking ways to create long-term value, more and more players have adopted a common strategy: providing their content under the umbrella of a well-known media brand. Throughout his pseudo-forensic work, LoisH suggests that these cooperations are a short-term tactic, “piggybacking” on an authoritative domain, gaming algorithms and promoting duplicate content.

The Identity of LoisH

Given that the recent action was provoked by the filings of a single fake account, understanding the nature and intentions of LoisH is a topic of its own. Scrolling through his Twitter profile, you can get a sense of the energy, frequency and work he’s willing to devote to this cause and how desperately he tries to engage with industry experts, some of whom fall for his ideas.

There is quite a lot of circumstantial evidence that LoisH is no stranger to the couponing industry but, much more likely, an industry veteran deeply frustrated by his failure to adapt to the current ecosystem.

The sudden emergence of his anonymous profile, which coincided with the main protagonists entering a certain European market, his knowledge of the industry and its players, the tools he uses to forge evidence and hide his real identity and, most importantly, the lengths he’s willing to go to in order to provoke a strike against the targets all say a ton about his intentions and identity.

A genuinely concerned user has no reason to hide his identity and could simply tweet at Danny Sullivan or John Mueller to point out a critical quality issue with search. But LoisH is a hideous coward who has dedicated his life (or at least a significant part of his time) to denouncing competitors as a last resort to seek justice for his failure in the market. LoisH wants Google, the SEO community (which he’s actively trying to win over to his thinking) and the world to resent innovative market participants for the profits they earn by creating more useful and successful solutions.

Are Coupon Players Piggy-Backing on Authoritative Domains?

You should obviously read his posts for yourselves, but in a nutshell, the issue LoisH is trying to raise is the following:

- The overall authority of a domain is a strong ranking signal within Google’s algorithm(s)

- Newspapers, along with government authorities, universities and other institutions of science and education, are probably the most authoritative domains according to the simple yet effective original PageRank algorithm.

- By renting/leasing a space (folder or subdomain) on these hostnames for a monthly fee, a content provider might seek to promote its content into positions it otherwise couldn’t achieve

- Additionally, but not necessarily, the promoted content might be identical or similar to other assets of the same commercial website, thereby creating a “duplicate content” or “diversity” issue.

- LoisH repeatedly claims that other voucher sites like RetailMeNot and its UK subsidiary, Groupon and other smaller sites suffer a lot from this “unfair competition”.

- LoisH provides a list of media assets including their commercial content cooperations and partners, which at best looks like groundwork and evidence prepared for Google and at worst like a wishlist of penalties
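To make the PageRank point in the list above a little more concrete, here is a minimal toy sketch of the original PageRank idea (power iteration with a damping factor of 0.85, the value used in the original paper). It is an illustrative assumption, not how Google ranks pages today: the link graph and the page names (`news`, `news/coupons`, `coupon-site`, the blogs) are hypothetical. It only shows why a section hosted on a heavily linked domain can inherit more link-based score than a standalone site with the same number of inbound links.

```python
def pagerank(links, damping=0.85, iterations=100):
    """Toy PageRank via power iteration.

    `links` maps each page to the list of pages it links to.
    Pages without outbound links ("dangling" pages) spread their
    score evenly over all pages, so the scores always sum to 1.
    """
    pages = set(links)
    for targets in links.values():
        pages.update(targets)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        # Every page first receives the "random jump" share.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # Split this page's score evenly across its outbound links.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # Dangling page: distribute its score across all pages.
                share = damping * rank[page] / n
                for target in pages:
                    new_rank[target] += share
        rank = new_rank
    return rank


# Hypothetical toy web: three blogs link to a newspaper home page,
# which links to its politics and coupon sections; one blog also
# links to a standalone coupon site.
toy_web = {
    "blog-a": ["news", "coupon-site"],
    "blog-b": ["news"],
    "blog-c": ["news"],
    "news": ["news/politics", "news/coupons"],
    "news/politics": ["news"],
    "news/coupons": [],
    "coupon-site": [],
}

scores = pagerank(toy_web)
for page, score in sorted(scores.items(), key=lambda item: -item[1]):
    print(f"{page:15} {score:.3f}")
```

Both coupon pages have exactly one inbound link; the section on the newspaper host scores higher only because its link comes from a page that many others link to. Real-world ranking combines far more signals than this toy model.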

A more informed view on the topic

This all reads very logically, and especially as an SEO who always cares about a level playing field and has an inner urge for “fairness”, you’ll be inclined to follow the above assumptions. They are designed to provoke exactly this reaction. Even some otherwise well-known and competent publications have jumped on the story and partly support these allegations.

But here’s why I disagree and what most of the commentators have gotten wrong so far… (IMHO)

1. To Google, fair shall be what benefits the user

Arguing about fairness doesn’t get us anywhere. You could just as well argue that a competitor hiring a top-notch SEO, running better content marketing or getting access to exclusive promotions isn’t fair. By that logic, any search engine optimisation interferes with and hampers the success and viability of competitors.

We have to accept that SEO, as long as it’s in line with Google’s guidelines, is a legitimate discipline for promoting your product. And by the way, Google itself has stated unambiguously that this model does not infringe its quality guidelines at all:

So as users’ needs, the markets and the algorithms evolve, we have to accept that some will benefit and some will be washed out. And just like with any algorithmic change, those who succumb will claim unfairness while those who prevail get to enjoy the fruits of their hard work. We have to accept that, for those who succumb, the definition of SPAM will always be “Sites Presented Above Mine”. If you believed you were running your business the right way when you weren’t, being taught a lesson by Google must seem wrong and unfair to you.

Personally, I’ll always welcome updates and algorithm changes, as they are the most effective way to clean up the market and create value for the user. As long as Google’s changes target those who fail to adapt and to work on their products day and night, I’ll support any algorithm change that washes out the worst of my competitors while making room for new and innovative players.

BUT: The changes of last Friday – they are reactionary and conservative. They seek to demote what the Google Algorithm has found to be the best solution for the user. They seek to re-establish an ecosystem that has already failed. They seek to reverse progress.

2. The search for harm – Where’s the damage?

To someone not familiar with the coupon industry, promoting much too similar content under different brands and making use of the trust in media brands must sound like a tremendous SPAM effort. If you were in the market for sneakers, package holidays or just news on politics, finding a diverse landscape with many significantly different offerings would be crucial for your happiness and the efficiency of your research. Diversity is a value driver and duplicate content a detractor, no question.

But the coupon landscape is a special animal. Basically, any provider, whether through affiliate networks or direct partnerships, can access a 95% identical pool of coupon codes for virtually any retailer in the world. In other words: coupon codes have been completely commoditized. With very few exceptions they are universally accessible, and while the user’s sole intent is to find the sought-after code as fast as possible, a coupon site’s sole value is to present the most valuable code to the user in the most frictionless way.

Beyond the name of the brand, the colour of the site, the ease of redeeming the coupons and some other personal preferences, there’s no way you can think of to differentiate your product in a meaningful way. In other words: it’s almost irrelevant which coupon website Google surfaces for you, unless it is deceptive or fraudulent, and Google seems to have treated this segment accordingly.

In my personal experience, results often looked random, sometimes paradoxical, mostly unpoliced, and it was hard to determine how to secure long-term success in this niche. For the user, on the other hand, all the frequent changes didn’t seem to affect quality much, as they were always able to find their coupon codes, if any existed.

Given the high volatility of the market, most participants have always diversified by running more than one platform, so that if rankings changed, they had another shot at the market and didn’t have to hire and fire people on a monthly basis. Other players relied on a “churn & burn” strategy, regularly burning through new domains and brands, retaining authority through redirects but moving to a new domain several times a year.

In short: there never was real product differentiation in the coupon industry, and duplicate content isn’t a tactic of any one player but a feature inherent to the whole industry, both within companies and across the market. Because its participants are interchangeable, even the oldest coupon “brands” that have been around for decades still rely heavily on search traffic.

3. But how to create value in a commoditized market?

In my role as an angel investor and advisor, I’ve worked with more than one company in the couponing space and – DISCLAIMER – am still a minority shareholder in one of the targeted companies. When asked for SEO or strategic input, the best advice I could think of in a totally commoditized industry was to create a real, long-lasting USP (e.g. access to exclusive offerings, or early access to existing coupons) or a significantly better user experience, banking on Google’s algorithms to learn which product serves the user best in the long term. I’ve always advised companies to do so, and later learned that these approaches don’t only make sense with regard to SEO but are in fact the classic approaches to commoditized markets.

However, even the companies putting significantly more resources and attention to detail into their products still didn’t manage to create enough meaningful (and recognizable) value to sustain top rankings for long. Since the best user experience is to spend a minimum amount of time on the coupon site and not to engage beyond the frictionless redemption of the coupon itself, any UX improvement you might have in mind would never even get the chance to be used.

Because of the way users research, those ranking on top would lose their rankings over time as users bounced and continued to seek better coupons. And websites in lower positions, which could make the user give up on visiting even more identical websites, would eventually rise to the top one day, only to find themselves in the hot seat. In that regard, the Google Algorithm was fairly transparent, given a bit of background knowledge. There was no measure coupon players didn’t try in order to change this typical user behaviour and the algorithm’s answer to it. Yet no one managed to escape the pogo-sticking.

4. Brands – The answer to anything?

There was one more thing no one thought about. A third way to create value in commoditized markets: Put a sticker on it.

Most people don’t know how and why brands emerged. We know they are kind of a marketing trick and shall signal value, a certain image or quality standards to consumers. These assumptions are alright. They all make sense. But the original and macroeconomic value of brands is another key mechanism:

A brand can reduce the transaction costs, particularly the cost of search and information, or research cost in short.

In order to find the best or preferred beer, whiskey or cola in a saloon in a different town, you’d have to taste your way through a lot of nasty beverages before you got to enjoy a drink you like. In order to find the jeans that fit you best, every time you were in the market for a new pair, you’d spend a lot of time trying on dozens of pants. In the worst case, this would make the whole transaction so unenjoyable and cumbersome that consuming the sought-after good might not outweigh the burden of research and the cost of acquiring the necessary information, so that the transaction as a whole could end up with negative utility. If you’ve ever spent 15 minutes finding an active coupon for your 10-buck Deliveroo meal, you get a sense of what I’m talking about.

And that’s why a cooperation in which content is provided under a media brand can add value for the user, far beyond the short-sighted primary ranking benefit. As users trust certain media outlets more than random coupon sites with obscure names, their trust in the editorial oversight and authority of these brands might encourage them to trust the findings and go with the coupon these cooperations have provided.

If a user trusts these sites a bit more, or a fraction of users trusts them a lot, these white labels do create value for the user by saving them not only money but something much more valuable: TIME. We’ve all been in a situation where we clicked through more than one Google result because we didn’t trust the source ranking first, especially but not only for life-and-death queries like medical or financial advice. We also know we might have saved lots of time had a more authoritative source or author presented the same information.

The value of content (and coupon codes are basically a form of commercial content) is defined not only by the information it contains, but also by the probability that this information is correct. If some random dude on Twitter posted something on SEO, you’d have to do lots of research to validate his findings. If Rand Fishkin or Glenn Gabe posted the same thing, you’d assume it’s right and usually save a lot of time as a result. It’s also the reason why LoisH tries to engage SEO experts in his play. Who (but Google) would put any trust in an anonymous Twitter account?

Knowing that Google does a great job, and gets better every day, at observing users’ behaviour and preferences, we have to assume that the white labels don’t rank well only because they benefit from the “SEO trust” or “link juice” of these domains. They are borrowing their authority in a much broader way and creating value for the user, which Google’s algorithms then recognize, learning that these sites might create better experiences, far beyond anything an SEO would think about.

Most importantly, there’s no proof that these white labels have caused any harm to the user or her search experience. The search results have neither promoted poor quality nor worsened the user experience at all. Quite the opposite: they have likely improved the research process and generated welfare on a macro level. Interfering now with the results of the sophisticated Google Algorithm will certainly flush lower-quality sites back up while destroying consumer surplus.

5. Why editorial oversight might be better than Google oversight

Whoever goes as far as calling the well-maintained white-label cooperations dangerous or fraudulent to the consumer has either not seen the previous result pages or must be out of his mind, as they so clearly benefit the consumer.

As a result of the intense cooperation between publishers and commercial content providers, many publishers have established quality standards, and content providers strive to deliver high-quality products to ensure the endurance and profitability of the liaison.

Some publishers were able to build up their own commercial content, like Insider Picks or El Pais Escaparate. Some have acquired commercial content partners or set up departments to engage with external providers. Diversifying digital revenues through commercial content has become a key strategy for growth and survival, and it is often the publishing houses that initiate such joint ventures, screening the market and reaching out to content providers in order to enter a cooperation at limited cost and risk.

Agreeing on terms for such a joint venture is in fact much more complicated and time-consuming than just wiring a few hundred bucks to a media outlet. So once such a cooperation is agreed upon, both parties have a strong incentive to create long-term value and maintain high quality standards. Hence, the average quality of such white labels was usually far higher than the market average.

6. You can take a horse to water, but you can’t make it drink

Even if you accept the suggestion that the commercial content only ranked well in Google because it was hosted on authoritative domains, you’re neglecting two important facts:

- Not all of these cooperations were as fruitful as LoisH’s target list would suggest. For each white label gaining relevant market share, there were two that didn’t amount to anything, as has always been the case in every other industry in the pursuit of organic traffic. Only those that maintained high quality compared to their peers, integrated well with publishers and stuck to their standards actually thrived.

- As Google has become almost perfect at tracking the user’s intent, experience and satisfaction, you don’t actually believe one could surface an absolutely shitty product to millions of users and it would stay there just because it was hosted on a generally trustworthy domain, do you?

7. Should Google interfere with its algorithms at all?

I’ll admit hands-down that the vast majority of Google Updates and Core Algorithm Changes for the organic results have clearly improved search quality over time. And as said before, I welcome it every time Google raises the bar for SEOs and rewards those who try to improve their products for the user.

Speaking at conferences, I’ve repeatedly stressed that I believe Google does an excellent job of understanding users’ preferences and behaviour, and in my humble opinion, user experience and satisfaction have become by far the most dominant ranking factor nowadays.

Hence, I fail to see how a targeted interference based on LoisH’s blacklist could remotely improve search quality. We’re talking about almost arbitrarily demoting certain commercial content folders within media outlets, while not acting on other folders, other forms of content or the same content on other domains. How can such an interference, initiated by an anonymous fake account, be superior to the years of data and conclusions of Google’s Algorithm, including all its signals and user feedback?

Those pages ranked because Google’s Algorithm deemed them the most appropriate answer to these queries while factoring in users’ behaviour and expressed intentions. They proved to be the best content in the best context at the right time. Not because I think so, but because the algorithm, which does a pretty darn good job in general, found them to be the best answers.

8. White Labels – The new trick?

Another big misconception when arguing about the pros and cons of such cooperations is the supposed novelty and urgency claimed by those with an interest in a sanction.

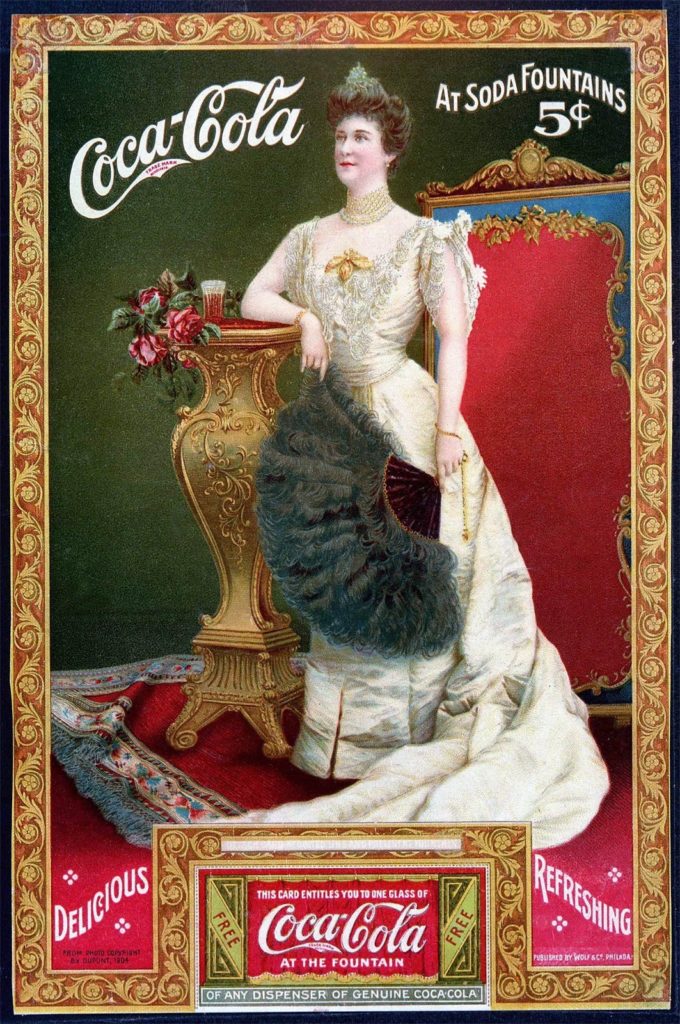

For Starters: Publishing and couponing have been a good marriage for a looooong time. Newspapers and magazines have published coupons since the 19th century and still do.

Reading the news, consumers would cut out their favourite coupons using scissors. They would carry around coupons as a reminder and later redeem their discount opportunity, while local shop owners could lift sales and as a by-product could track the efficiency of their print advertising. Think “19th century Google Analytics”. 😉

But suddenly people find it strange that publishers would offer couponing sections or couponing websites would seek to integrate with publishing.

I understand that renting a random subdomain or folder isn’t the same thing, but that’s not what’s happening in general. Most commercial content providers and publishers do much more than that. They cooperatively develop a concept for commercial content, use an “on-brand” look & feel, actively send users to their commercial sections when appropriate and apply their own quality standards.

Beyond this, news outlets are not only no strangers to coupons or commercial content; by definition, they are general authorities. The sections of a newspaper range from politics to sports, from business to travel, from culture and Feuilleton to real estate and jobs. Since their inception, newspapers have been deemed relevant in general, and their content has been a diverse mix of topics and interests. There’s no reason why they shouldn’t publish coupons or why they wouldn’t be deemed relevant for commercial content.

The Main Course: Licensing out brands is not a new thing either. I hope this isn’t news to you; if so, sorry for breaking it to you, but your Prada sunglasses are not actually from Prada and your Hugo Boss perfume isn’t from Hugo Boss either. Also, almost all lipstick brands are produced by the same Italian company that nobody knows about.

While brands typically produce their core product range themselves, or at least source it on their own in some cheap-labour country, most brands license their good name to white-label producers, engage in joint ventures or enter revenue-share deals with specialized producers or wholesale partners.

Who is Google to decide which business model and cooperation should be white-listed and which shall be punishable by Google? Most publishers have classifieds sections of some sort: A job portal, a car market or a real estate offering. A sports section, a weather channel or travel ideas. Sometimes these are integral parts of the publishing house, sometimes they’re separate entities. Sometimes they are provided by external partners. Where to draw the line and how could Google possibly correctly assess the situation?

Companies shall have the freedom to engage in the contracts that fit their needs best without having to fear retaliation. They shouldn’t be limited in their possibilities to monetize their work for no clear reason. They should be able to rely on the rule of law and if need be comply with the Google Guidelines.

But a company with the infrastructural relevance of Google/Alphabet has to hold itself to higher standards. It should make its actions transparent and comprehensible. It should act upon its promises to care for the user or explain why their view of the facts has changed. Under all circumstances, it shouldn’t become a useful idiot and let itself be instrumentalised by a party seeking to denounce its competitors.

A tech company, busy lobbying politicians and scientists around the world not to regulate the internet and to keep a liberal view on things, should be more careful and responsible when interfering with the markets itself.

For Dessert: Now that you know coupons in publishing aren’t exactly news, and neither is selling external products under your own brand, you might think that setting up shop on subdomains or folders of authoritative domains is a new move and that media outlets don’t know what they’re doing here.

But the successful liaison between content providers and media outlets, again, has been around forever. Would you argue that a weather company providing the weather for a newspaper is abusing its reach or authority? Would you argue that the few financial content providers like Bloomberg or IWD create duplicate content by supplying identical, yet useful, data to most newspapers and finance portals, data that then ranks several times in Google’s top 10?

Why did the now worried parties remain silent when newspapers flooded the SERPs with Sudoku subdomains or IQ tests? Aren’t those games and tests all the same? Where’s your diversity now?

But here’s what happened: they might all be the same, but Google has figured out that these pages return good user signals and how to rank them accordingly. And as they’re mostly the same, there’s no harm anyway. In fact, portals that previously spread malware, breached users’ privacy or otherwise harmed the experience have finally been wiped out. Good job.

9. Arbitrary Action and the Damage Done

So why would Google want to treat this specific case so differently? I can’t imagine Google enjoying being in the position of having to draw a line between legitimate and questionable cooperations. Which models are white- or blacklisted? What should guide the decision? Which signals should be indicative of good and bad behaviour? Wouldn’t it start and end with the user, and isn’t that what the algorithm has already found to be right?

Google might want to set an example here, as it has done before. A public scapegoat, hanging a prominent head very high, can save lots of work in policing the masses. And the chances that short-term-thinking SEOs will double down on and exploit these strategies are indeed high. If publishers don’t take care of their brands and assets, if their quality guidelines are too loose, Google’s Algorithm will find out about it anyway.

So why risk an arbitrary move, targeted at a handful of industries and companies, while giving everything else a pass? How can one thing be alright and the other not? And how can webmasters learn the dos and don’ts? Where’s the difference, and where’s the urgency to actively demote better results?

Why has Google taken up the punitive wishlist of an anonymous user and executed it almost perfectly while giving lots of very similar cases a pass? What’s the lesson for us? Start denouncing competitors? Google, which previously invested in RetailMeNot, thinks coupons are bad? The action taken doesn’t look algorithmic at all; otherwise it would have affected many other concepts of commercial content as well. It appears to be targeted at the examples given, and almost exclusively at couponing content on publishing sites.

The collateral damage has already been done. Publishers have been robbed of another potential source of revenue: an immensely important revenue stream for monetizing their work in the pursuit of keeping journalism alive.

Some might not have cared enough about the quality of their content, and Google’s Algorithm had already found out and ranked them accordingly. But many have just tried to do their job: create useful content and run advertising against it. There’s no right or wrong way to do this. Right is what users deem useful. And users, via the algorithm, have promoted this content to its superior rankings.

Now Google has interfered in a weirdly selective way, making itself look like a useful idiot. Webmasters have no means of reproducing the logic or learning a lesson. The lesson learned is that it’s not only the work you put into your product that counts, but also how you communicate your ideas to Google and how much awareness you’re able to raise for your ranking issues.

DISCLOSURE: The author holds a minority share in one of the targeted companies (“Global Savings Group”). If you deem this relevant, you should also ask yourself what the incentive is for the anonymous LoisH, who invests a hell of a lot more time and energy into this while being transparent about neither his identity nor his interest in the couponing sector.

Searching for Harm - Did Google Take Another Swing at the Publishing Industry?
